The Phi-4 tokenizer interestingly uses <|endoftext|> as the BOS (beginning of sentence), EOS (end of sentence), and PAD (padding) token. The main issue is that the EOS token is wrong: it should be <|im_end|>. Otherwise, you will get <|im_end|><|endoftext|> in generations.

The padding token should be a designated pad token, like Llama's <|finetune_right_pad_id|>, or an untrained token. For example, we use <|dummy_87|>.

Using the incorrect pad token can result in infinite generations, because pad tokens get masked out during the loss calculation. Since the EOS token here is the same as the pad token, the EOS token is masked out too, and the model is never trained to stop. We must therefore use a separate, correct pad token so that the EOS token is not accidentally masked.
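To make the masking problem concrete, here is a minimal sketch in plain Python. The token ids and the `build_labels` helper are hypothetical and exist only for illustration. The masking rule (every label equal to the pad id is replaced with -100, the default ignore index of PyTorch's cross-entropy loss) is an assumption about how the training collator behaves, not a description of any specific library's code.

```python
IGNORE_INDEX = -100  # label value skipped by PyTorch's cross-entropy loss by default


def build_labels(input_ids, pad_id):
    """Copy input_ids into labels, masking every position equal to pad_id."""
    return [IGNORE_INDEX if tok == pad_id else tok for tok in input_ids]


# Hypothetical toy ids, not the real Phi-4 vocabulary ids.
ENDOFTEXT, IM_END, DUMMY_PAD = 0, 1, 2
HELLO, WORLD = 10, 11

# Case 1: EOS and PAD are both <|endoftext|>.
# The sequence ends with one EOS followed by two pads, all sharing the same id.
seq_shared = [HELLO, WORLD, ENDOFTEXT, ENDOFTEXT, ENDOFTEXT]
labels_shared = build_labels(seq_shared, pad_id=ENDOFTEXT)

# Case 2: EOS is <|im_end|> and PAD is a separate, untrained token.
seq_fixed = [HELLO, WORLD, IM_END, DUMMY_PAD, DUMMY_PAD]
labels_fixed = build_labels(seq_fixed, pad_id=DUMMY_PAD)

print(labels_shared)  # the EOS position is masked along with the padding
print(labels_fixed)   # the EOS position keeps its label, only padding is masked
```

In the first case the loss never sees an EOS target, so nothing teaches the model when to stop. In the second case the EOS label survives and only the padding is ignored.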

The Phi-4 tokenizer always adds an assistant prompt. It should only do this when requested via add_generation_prompt. Most LLM serving libraries expect that the assistant prompt is not added automatically, so this might cause issues during serving.
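The intended behavior can be sketched as a small Python function. This is an illustration, not the actual Jinja chat template: `render_chat` is a hypothetical helper, and the `<|im_start|>role<|im_sep|>content<|im_end|>` layout is an assumption about the Phi-4 prompt format.

```python
def render_chat(messages, add_generation_prompt=False):
    """Render a list of {"role", "content"} dicts into one prompt string.

    The assistant header is appended only when the caller asks for it.
    """
    parts = []
    for message in messages:
        parts.append(
            f"<|im_start|>{message['role']}<|im_sep|>{message['content']}<|im_end|>"
        )
    if add_generation_prompt:
        # Open an assistant turn so the model continues from here.
        parts.append("<|im_start|>assistant<|im_sep|>")
    return "".join(parts)


chat = [{"role": "user", "content": "Hi"}]
without_prompt = render_chat(chat)
with_prompt = render_chat(chat, add_generation_prompt=True)
```

A serving library that appends its own assistant header would produce a duplicated header if the template had already added one, which is why the flag should control this.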

Multiple reports from Redditors show our fixes do in fact work! For example, on the Hugging Face OpenLLM Leaderboard, our fixes and Llama-fication of Phi-4 do better than or on par with Microsoft’s official Phi-4 model!

Reddit comments show our fixes make Phi-4 inference much better:

Exhibit #1: Someone’s internal multiple choice testing shows our fixed version does much better:

Exhibit #2: Telling Phi-4 to draw ASCII art of a house:

We also ported Phi-4 directly into a Llama architecture! This allows finetuning to be much more accurate since QKV are unmerged, and gate/up are also unmerged, which lets LoRA finetuning learn a separate A matrix for each. View the uploads here.
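As a rough sketch of what unmerging means, the snippet below splits a fused projection weight into separate matrices using plain Python lists. The helper names and the toy dimensions are hypothetical, and the row layout (Q rows first, then K, then V; gate rows first, then up) is an assumption about how the fused weights are stored. This is not the actual conversion script.

```python
def split_fused_qkv(fused_rows, q_dim, kv_dim):
    """Split a fused [q_dim + 2 * kv_dim, hidden] weight (a list of rows) into Q, K, V."""
    if len(fused_rows) != q_dim + 2 * kv_dim:
        raise ValueError("fused weight has an unexpected number of rows")
    q = fused_rows[:q_dim]
    k = fused_rows[q_dim:q_dim + kv_dim]
    v = fused_rows[q_dim + kv_dim:]
    return q, k, v


def split_fused_gate_up(fused_rows):
    """Split a fused [2 * intermediate, hidden] weight into gate and up halves."""
    half = len(fused_rows) // 2
    return fused_rows[:half], fused_rows[half:]


# Toy example: hidden size 2, 4 query rows, 2 key rows, 2 value rows.
fused_qkv = [[i, i] for i in range(8)]
q_w, k_w, v_w = split_fused_qkv(fused_qkv, q_dim=4, kv_dim=2)

# Toy example: intermediate size 3, so the fused gate/up weight has 6 rows.
fused_gate_up = [[i, -i] for i in range(6)]
gate_w, up_w = split_fused_gate_up(fused_gate_up)
```

With a fused projection, a LoRA adapter adds a single low-rank update B·A, so one A matrix is shared by Q, K, and V. After the split, each projection gets its own adapter and therefore its own A matrix.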

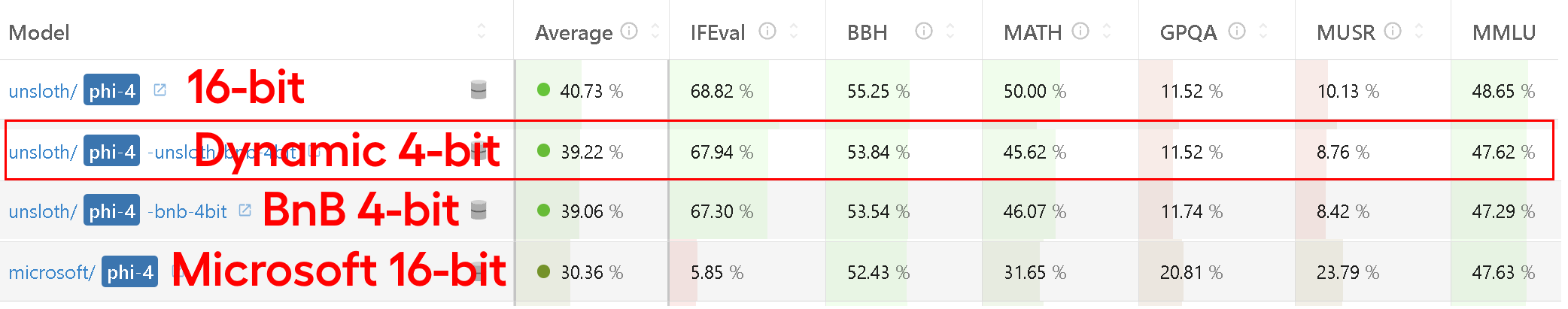

We uploaded 4-bit bitsandbytes pre-quantized models for 4x faster downloading. However, Unsloth's Dynamic 4-bit quants show we mustn't quantize all layers. Leaving some layers unquantized results in largely increased accuracy while only using 10% more VRAM.
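The idea of not quantizing every layer can be illustrated with a toy selection rule. This is only a sketch under stated assumptions: the quantizer is a simple symmetric absmax round-to-nearest scheme (a stand-in, not the bitsandbytes 4-bit format), the threshold and layer names are hypothetical, and the rule of skipping layers with high weight error is an assumption, not the actual selection criterion used for the dynamic quants.

```python
def quantize_dequantize_4bit(weights):
    """Round weights onto 15 symmetric integer levels in [-7, 7] and map them back."""
    scale = max(abs(w) for w in weights) / 7 or 1.0  # fall back to 1.0 for all-zero weights
    return [round(w / scale) * scale for w in weights]


def relative_error(weights):
    """Relative L2 error introduced by the toy 4-bit round trip."""
    dequantized = quantize_dequantize_4bit(weights)
    num = sum((w - d) ** 2 for w, d in zip(weights, dequantized))
    den = sum(w ** 2 for w in weights) or 1.0
    return (num / den) ** 0.5


def pick_layers_to_skip(layers, threshold):
    """Return the names of layers whose quantization error exceeds the threshold."""
    return [name for name, w in layers.items() if relative_error(w) > threshold]


# Hypothetical toy layers: one sits on the quantization grid, one has an outlier.
layers = {
    "well_behaved": [-0.7, -0.3, 0.0, 0.3, 0.7],
    "outlier_heavy": [0.4, -0.5, 0.6, -0.4, 3.0],
}
keep_in_16bit = pick_layers_to_skip(layers, threshold=0.05)
```

Here the layer with an outlier loses precision on its small weights, so it is the one kept in 16-bit, while the other layer quantizes almost losslessly.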

A great example of our dynamic quants' effectiveness comes from submitting them to Hugging Face's OpenLLM Leaderboard. Our 4-bit dynamic quant scored nearly as high as our 16-bit version, and well above the standard BnB 4-bit quant and Microsoft's official 16-bit model, especially on MMLU.

We uploaded our dynamic 4-bit quants, which leave some layers in 16-bit, here.

See the activation and weight error analysis plots below: